2020年8月7日にトレードステーションが終わった。

今年(2022年)はシストレ魂が終わった。

シストレ魂利用者へ残念なお知らせが届きました。

【重要】サービス終了のお知らせ

シストレ魂 運営事務局です。

11:54 PM · Mar 10, 2022

現在「イザナミ」「システムトレードの達人」の二強だけど、「システムトレードの達人」は終了予定とも言っていた(現在ツイートが消えている)。

西村剛@証券アナリスト @nishimuraFT

今日斉藤さんとも相談して、今年のどこかのタイミングでシス達の販売を終了することが決まりました。

#システムトレードの達人

午前1:56 · 2020年1月26日·

もう時代が変わりつつあるんだろうね。

【現在の最新状況】

| ツール名 | コメント | 利用者名 |

|---|---|---|

| TradingView | 世界中で利用されている | |

| イザナミ | 日本では最もポピュラーなツール | koz-kay氏、ニッパー氏、hamhamseven氏、アイアン鉄氏、Sand氏、シスヤ氏、トレシズ氏、スカイダイヤ氏・・多数 |

| システムトレードの達人 | 斎藤正章氏が製作 | 夢幻氏、紫苑氏、ゆうし、中原良太氏、スカイダイヤ氏 |

| 【終了】シストレ魂 | 中村義和氏が製作 | ゆう氏、Mariku氏、スカイダイヤ氏 |

| 【終了】トレードステーション | マネックス証券で利用 | テスタ氏、 |

| 【終了?】iTRADE | 重鎮 野川徹氏が製作 | 押田庄次郎氏、スカイダイヤ氏 |

| 【OSS】OmegaChart | 岡嶋大介氏が製作。後に第1回カブロボ・コンテストで野村総研特別賞受賞 | 2ちゃんねらー? |

| 【OSS】Protra | Daisuke Arai氏が作成。panacoran氏がメンテナンス | かぶみ氏、 |

| 【終了?】パイロン | 小次郎氏とN氏 | Hiro氏、 |

| 【終了?】Tactico (タクティコ) | 岡嶋大介氏が製作。「OmegaChart」の後継 | |

| 【終了?】検証くん | 保田望氏が製作 |

システムトレードの時代が終わりに近づいているんだと思うよ。

たとえば、

「25日移動平均線が○%以下で、

かつ、60日移動平均線が○%以下、

かつ、RSIが○○%で、

かつ、ボリンジャーバンドが○○で、

そして、MACDが○○。

そして、足組みがはらみ線になっていて、

出来高が前日に比べて××。

さらに・・・」

なんていう、条件が10も20もあるようなルールを作って勝てる意味が分からない。

そんなこと考えてる間に投資信託に預けるのが成功の時代だからね。

| タイプ | 現商品 | 説明 |

|---|---|---|

| 旧タイプ | 個別株やFX | 10年前のスタンダード。インデックスに反発や憧れや色んな感情を持ってる |

| 新タイプ | インデックス | 最近始めた人は皆これ。苦労も知らずに最適解にたどり着きラッキー |

| ハイブリッド | インデックス&個別 | 昔FXや個別で大損して、インデックスに漂着 |

インデックス投資で年利10%以上でてるよ?

とはいえ、自分の知識を上げて売買できないと資金は凍結になっちゃうし、売買頻度を上げて利益拡大はできない。

今回は基本に戻って日経平均株価の上下を予測してみる。

AIで日経平均を予想するサービス調査

日経平均予想サイトは沢山あるようだ。

【無料】

【有料】

最後のリンクの人の考えは面白い。

- 1570 (NEXT FUNDS) 日経平均レバレッジ上場投信

- 1357 (NEXT FUNDS) 日経ダブルインバース上場投信

を買って儲けを最大化するAIを作っている。

要するに、株価は必ず上がり下がりがあるので、どちらかを始値で買い(寄付成行(寄成))、終値で売る(引成行(引成))ことで出る利益の最大化を目指す。

更に、今の時代は日計り信用取引になれば取引手数料、金利・貸株料ともに0円。

AIで【1570】か【1357】かを予想する

日経平均株価「始値 → 終値」の上下をAIが予測。

それにより1570か1357を[寄り]で買って[引け]で売る簡単デイトレードのバックテストをしてみよう。

ただし、1570(日経平均レバレッジ上場投信)は2012年4月12日、1357(日経ダブルインバース上場投信)は2017年5月8日からしかデータは無い。

機械学習用のデータは次の32個を用意した。

【通常(システムトレード用)データ】

全部で7個。

- 日付、始値、高値、安値、終値、出来高、曜日

【テクニカル指標】

全部で34個。計算して求めている。

- 移動平均(3日、15日、50日、75日、100日)

- ボリンジャーバンド(σ1、σ2、σ3)

- MACD(シグナル、ヒストグラム)

- RSI(9日、14日)

- ADX(平均方向性指数)

- CCI(商品チャンネル指数(Commodity Channel Index))

- ROC(rate of change)

- ADOSC(チャイキンオシレーター:A/DのMACD)

- ATR(Average True Range)

- 移動平均乖離率(5日、15日、25日)

- 前日比(1日、2日、3日)

- VR(Volume Ratio)

- 日経225騰落レシオ(1日上げ/下げ/Ratio、5日上げ/下げ/Ratio、25日上げ/下げ/Ratio)

なお、騰落レシオは以前はProtraを使って計算させていたが、今回はPython内で解けるように変更した。

で結果の「AUC score」=0.499045……

AIの逆をやった方が当たってるわww

因みに機械学習が重要だと判断した指標は次のとおり。

前日、2日前、3日前の差分情報、乖離率が上位。

バックテスト結果

ここがProtraを使った私のサイトの売りの一つだったのに

Protraは同日の同一銘柄の売買が計算できない

致命的過ぎるのでProtraで売買のログだけ出力して、パースしてExcelで計算してる。

計算時間は数分(省略)。

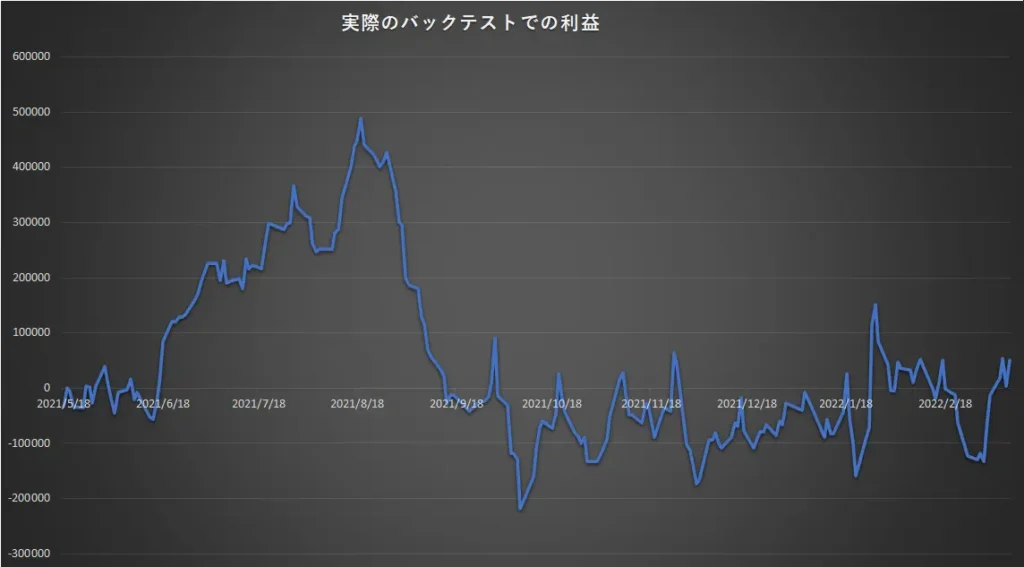

利益曲線は次の通り。

単調増加に見えるが、2021/05/17~2022/03/11本当の利益は次の通り。

ものの見事にボロボロだなーーー。

とはいえ、過去のデータを使って50日分予測しているからボロボロだけど、前半時期はある程度上昇している。

結果をまとめると次のような感じかな。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

株価データ: 日足 銘柄リスト: 1570, 1357 2021/05/17~2022/03/11における成績です。 ---------------------------------------- 全トレード数 199 勝ちトレード数(勝率) 113(56.78%) 負けトレード数(負率) 86(43.22%) ---------------------------------------- 全トレード平均利益 ¥257 純利益 ¥51,339 プロフィットファクター 1.01 ---------------------------------------- |

まとめ

カーブフィッティングしている。

サイコロを転がした方が当たってるじゃん。

今までやってた私の機械学習レベルなんてこんなもんだろうね。

てか、日経平均の予測って過去のデータから正しくできるの?

一民間組織(日本経済新聞社)が、

4000以上の銘柄から225銘柄を抽出し、

ほぼ毎年入れ替えて、

業界枠のために活発な企業は含まない(こともある)

それが「日経平均」の正体。

つまり10年前の日経平均データを使って今の日経平均を単純に予測しても全く意味がないと思うよ。

ソースコード

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 295 296 297 298 299 300 301 302 303 304 305 306 307 308 309 310 311 312 313 314 315 316 317 318 319 320 321 322 323 324 325 326 327 328 329 330 331 332 333 334 335 336 337 338 339 340 341 342 343 344 345 346 347 348 349 350 351 352 353 354 355 |

import os import gc import time import pickle import optuna import numpy as np import pandas as pd import seaborn as sns import matplotlib.pyplot as plt from sklearn import metrics from lightgbm import LGBMClassifier from sklearn.metrics import roc_auc_score from sklearn.metrics import accuracy_score, roc_curve from sklearn.model_selection import KFold, StratifiedKFold from contextlib import contextmanager from nehori import tilib from nehori import protra g_path = "C:\\Users\\XXXX\\stock\\Protra" @contextmanager def timer(title): t0 = time.time() yield print("{} - done in {:.0f}s".format(title, time.time() - t0)) # CSVの読み込み(利用しない) def read_csv2(stock_id, skiprows, skipfooter): file = "tosho/" + str(stock_id) + ".csv" if not os.path.exists(file): print("[Error] " + file + " does not exist.") return None, False else: return pd.read_csv(file, skiprows=skiprows, skipfooter=skipfooter, engine="python", names=("date", "open", "high", "low", "close", "volume"), # For "ValueError: DataFrame.dtypes for data must be int, float or bool." dtype={'open': float, 'high': float, 'low': float, 'close': float, 'volume': float} ), True # Protraからの直接読み込み def read_protra_stock(stock_id, skiprows, skipfooter): global g_path p = protra.PriceList(g_path) l_2d = p.readPriceList(stock_id) if (skiprows != 0): del l_2d[:skiprows] if (skipfooter != 0): del l_2d[-1 * skipfooter:] df = pd.DataFrame(l_2d, columns=("date", "open", "high", "low", "close", "volume")) df = df.astype({'open': float, 'high': float, 'low': float, 'close': float, 'volume': float}) return df, True # 概要出力 def display_overview(df): # それぞれのデータのサイズを確認 print("The size of df is : "+str(df.shape)) # 列名を表示 print(df.columns) # 表の一部分表示 print(df.head().append(df.tail())) # 予測値(当日の終値 - 始値 >= 0か?) def get_target_value(df): df['target'] = df['close'].shift(-1) - df['open'].shift(-1) df.loc[(df['target'] >= 0), 'target'] = 1 df.loc[(0 > df['target']), 'target'] = 0 return df # データ前処理 def pre_processing(df): # 目的変数(*日後の始値の上昇値) df = get_target_value(df) # 曜日追加 df['day'] = pd.to_datetime(df['date']).dt.dayofweek # 新特徴データ df = tilib.add_new_features(df) # 欠損値を列の1つ手前の値で埋める df = df.fillna(method='ffill') return df # feature importanceをプロット def display_importances(feature_importance_df_): cols = feature_importance_df_[["feature", "importance"]].groupby("feature").mean().sort_values(by = "importance", ascending = False)[:40].index best_features = feature_importance_df_.loc[feature_importance_df_.feature.isin(cols)] plt.figure(figsize = (8, 10)) sns.barplot(x = "importance", y = "feature", data = best_features.sort_values(by = "importance", ascending=False)) plt.title('LightGBM Features (avg over folds)') plt.tight_layout() plt.savefig('lgbm_importances01.png') # ROC曲線をプロット def display_roc(list_label, list_score): fpr, tpr, thresholds = roc_curve(list_label, list_score) auc = metrics.auc(fpr, tpr) plt.plot(fpr, tpr, label='ROC curve (area = %.2f)'%auc) plt.legend() plt.title('ROC curve') plt.xlabel('False Positive Rate') plt.ylabel('True Positive Rate') plt.grid(True) # Optuna(ハイパーパラメータ自動最適化ツール) class Objective: def __init__(self, x, y, excluded_feats, num_folds = 4, stratified = False): self.x = x self.y = y self.excluded_feats = excluded_feats self.stratified = stratified self.num_folds = num_folds def __call__(self, trial): df_train = self.x y = self.y excluded_feats = self.excluded_feats stratified = self.stratified num_folds = self.num_folds # Cross validation model if stratified: folds = StratifiedKFold(n_splits = num_folds, shuffle = True, random_state = 1001) else: folds = KFold(n_splits = num_folds, shuffle = True, random_state = 1001) oof_preds = np.zeros(df_train.shape[0]) feats = [f for f in df_train.columns if f not in excluded_feats] for n_fold, (train_idx, valid_idx) in enumerate(folds.split(df_train[feats], y)): X_train, y_train = df_train[feats].iloc[train_idx], y.iloc[train_idx] X_valid, y_valid = df_train[feats].iloc[valid_idx], y.iloc[valid_idx] clf = LGBMClassifier(objective = 'binary', reg_alpha = trial.suggest_loguniform('reg_alpha', 1e-4, 100.0), reg_lambda = trial.suggest_loguniform('reg_lambda', 1e-4, 100.0), num_leaves = trial.suggest_int('num_leaves', 10, 40), silent = True) # trainとvalidを指定し学習 clf.fit(X_train, y_train, eval_set = [(X_train, y_train), (X_valid, y_valid)], eval_metric = 'auc', verbose = 0, early_stopping_rounds = 200) oof_preds[valid_idx] = clf.predict_proba(X_valid, num_iteration = clf.best_iteration_)[:, 1] accuracy = roc_auc_score(y, oof_preds) return 1.0 - accuracy import lightgbm as lgb # 決定木を可視化 def display_tree(clf): print('Plotting tree with graphviz...') graph = lgb.create_tree_digraph(clf, tree_index=1, format='png', name='Tree', show_info=['split_gain','internal_weight','leaf_weight','internal_value','leaf_count']) graph.render(view=True) def load_model(num): clf = None file = "model" + str(num) + ".pickle" if os.path.exists(file): with open(file, mode='rb') as fp: clf = pickle.load(fp) return clf def save_model(num, clf): with open("model" + str(num) + ".pickle", mode='wb') as fp: pickle.dump(clf, fp, protocol=2) # Cross validation with KFold def cross_validation(df_train, y, df_test, excluded_feats, num_folds = 4, stratified = False, debug = False): print("Starting cross_validation. Train shape: {}, test shape: {}".format(df_train.shape, df_test.shape)) # Cross validation model if stratified: folds = StratifiedKFold(n_splits = num_folds, shuffle = True, random_state = 1001) else: folds = KFold(n_splits = num_folds, shuffle = True, random_state = 1001) # Create arrays and dataframes to store results oof_preds = np.zeros(df_train.shape[0]) sub_preds = np.zeros(df_test.shape[0]) df_feature_importance = pd.DataFrame() feats = [f for f in df_train.columns if f not in excluded_feats] for n_fold, (train_idx, valid_idx) in enumerate(folds.split(df_train[feats], y)): X_train, y_train = df_train[feats].iloc[train_idx], y.iloc[train_idx] X_valid, y_valid = df_train[feats].iloc[valid_idx], y.iloc[valid_idx] # LightGBM clf = LGBMClassifier(max_depth=6, num_leaves = 29) # trainとvalidを指定し学習 clf.fit(X_train, y_train, eval_set = [(X_train, y_train), (X_valid, y_valid)], eval_metric = "auc", verbose = 0, early_stopping_rounds = 200) oof_preds[valid_idx] = clf.predict_proba(X_valid, num_iteration = clf.best_iteration_)[:, 1] sub_preds = clf.predict_proba(df_test[feats], num_iteration = clf.best_iteration_)[:, 1] df_fold_importance = pd.DataFrame() df_fold_importance["feature"] = feats df_fold_importance["importance"] = clf.feature_importances_ df_fold_importance["fold"] = n_fold + 1 df_feature_importance = pd.concat([df_feature_importance, df_fold_importance], axis=0) save_model(n_fold, clf) del clf, X_train, y_train, X_valid, y_valid gc.collect() print('Full AUC score %.6f' % roc_auc_score(y, oof_preds)) #display_roc(y, oof_preds) display_importances(df_feature_importance) return sub_preds # Protraファイルの作成 def create_protra_dataset(code, value, value2, date, y_pred, flag): # 利益が高いと判定したものだけ残す y_pred = np.where(y_pred >= flag, True, False) s = "" s += " if ((int)Code == " + code + ")\n" s += " if ( \\\n" for i in range(len(y_pred)): if(y_pred[i]): (year, month, day) = date[i].split('/') s += "(Year == " + str(int(year)) + " && Month == " + str(int(month)) + " && Day == " + str(int(day)) + ") || \\\n" s += " (Year == 3000))\n" s += " return " + value +"\n" s += " else\n" s += " return " + value2 +"\n" s += " end\n" s += " end\n" return s def pred_load_model(clfs, df, stock_id, excluded_feats): n_splits = len(clfs) sub_preds = np.zeros(df.shape[0]) feats = [f for f in df.columns if f not in excluded_feats] for clf in clfs: sub_preds += clf.predict_proba(df[feats], num_iteration = clf.best_iteration_)[:, 1] / n_splits s = create_protra_dataset("1570", "1", "0", df["date"], sub_preds, 0.6) s = s + create_protra_dataset("1357", "0", "1", df["date"], sub_preds, 0.6) return s # 時価総額ランキングTop20 stock_names = [ "1001", ] # 日経255 updown_stock_names = [ "4151","4502","4503","4506","4507","4519","4523","4568","4578","3105","6479","6501", "6503","6504","6506","6645","6674","6701","6702","6703","6724","6752","6758","6762", "6770","6841","6857","6902","6952","6954","6971","6976","7735","7751","7752","8035", "7201","7202","7203","7205","7211","7261","7267","7269","7270","7272","4543","4902", "7731","7733","7762","9412","9432","9433","9437","9613","9984","8303","8304", "8306","8308","8309","8316","8331","8354","8355","8411","8253","8601","8604","8628", "8630","8725","8729","8750","8766","8795","1332","1333","2002","2269","2282","2501", "2502","2503","2531","2801","2802","2871","2914","3086","3099","3382","8028","8233", "8252","8267","9983","2413","2432","4324","4689","4704","4751","4755", "9602","9735","9766","1605","3101","3103","3401","3402","3861","3863","3405","3407", "4004","4005","4021","4042","4043","4061","4063","4183","4188","4208","4272","4452", "4631","4901","4911","6988","5019","5020","5101","5108","5201","5202","5214","5232", "5233","5301","5332","5333","5401","5406","5411","5541","3436","5703","5706","5707", "5711","5713","5714","5801","5802","5803","5901","2768","8001","8002","8015","8031", "8053","8058","1721","1801","1802","1803","1808","1812","1925","1928","1963","5631", "6103","6113","6301","6302","6305","6326","6361","6367","6471","6472","6473","7004", "7011","7013","7003","7012","7832","7911","7912","7951","3289","8801","8802","8804", "8830","9001","9005","9007","9008","9009","9020","9021","9022","9062","9064","9101", "9104","9107","9202","9301","9501","9502","9503","9531","9532", ] # 騰落レシオを作成 def get_up_down_ratio(skiprows, skipfooter): # 一つずつ pandaで読み込む df, val = read_protra_stock("1001", 0, 0) cols = ["date", "up1", "up5", "up25", "down1", "down5", "down25"] df_updown = pd.DataFrame(index=[], columns=cols) df_updown["date"] = df["date"] df_updown.fillna(0, inplace=True) # 初期化 for stock_id in updown_stock_names: df, val = read_protra_stock(stock_id, skiprows, skipfooter) # 1日、5日、25日前と比較して上昇しているかカウントする df_updown["up1"] = df_updown["up1"].where(df["close"].diff(1) >= 0, df_updown["up1"] + 1) df_updown["up5"] = df_updown["up5"].where(df["close"].diff(5) >= 0, df_updown["up5"] + 1) df_updown["up25"] = df_updown["up25"].where(df["close"].diff(25) >= 0, df_updown["up25"] + 1) df_updown["down1"] = df_updown["down1"].where(df["close"].diff(1) < 0, df_updown["down1"] + 1) df_updown["down5"] = df_updown["down5"].where(df["close"].diff(5) < 0, df_updown["down5"] + 1) df_updown["down25"] = df_updown["down25"].where(df["close"].diff(25) < 0, df_updown["down25"] + 1) display_overview(df_updown) # 騰落レシオ=(25)日間の値上がり銘柄数合計 ÷ (25)日間の下がり銘柄数合計 × 100 df_updown["updown1"] = df_updown["up1"] / df_updown["down1"] * 100 df_updown["updown5"] = df_updown["up5"] / df_updown["down5"] * 100 df_updown["updown25"] = df_updown["up25"] / df_updown["down25"] * 100 display_overview(df_updown) # 他の銘柄の合計数と加算する df_updown = df_updown.astype({'updown1': float, 'updown5': float, 'updown25': float, 'up1': float, 'up5': float, 'up25': float, 'down1': float, 'down5': float, 'down25': float}) return df_updown def main(df_train, df_test, stock_id): # 概要出力 #display_overview(df_train) # 学習モデル構築 df_test = df_test.drop("target", axis=1) df_train = df_train.dropna(subset=["target"]) # 正解データ・失敗データだけ利用する df_train = df_train[(df_train['target'] == 1) | (df_train['target'] == 0)] excluded_feats = ['target', 'date'] s = "" # 学習データが存在する場合 if (len(df_train)): if True: # 交差検証 y_pred = cross_validation(df_train, df_train['target'], df_test, excluded_feats, 2, True, True) print(y_pred) s = protra.create_protra_dataset(stock_id, df_test["date"], y_pred, 0.8) else: # ハイパーパラメータ探索 objective = Objective(x=df_train, y=df_train['target'], excluded_feats=excluded_feats, num_folds = 5, stratified = True) study = optuna.create_study(sampler = optuna.samplers.RandomSampler(seed = 0)) study.optimize(objective, n_trials = 50) return s # 結合版 if __name__ == '__main__': with timer("Up down ratio creation"): df_updown = get_up_down_ratio(0, 0) with timer("Data read"): df_train = pd.DataFrame() df_test = pd.DataFrame() for stock_id in stock_names: print(str(stock_id)) # CVを使っているのでTest用に一定数を未知のデータとする df, val = read_protra_stock(stock_id, 200, 200) df = pd.merge(df, df_updown, on='date') if not val: continue df = pre_processing(df) df_train = pd.concat([df_train, df]) #display_overview(df) # CVを使っているのでTest用に一定数を未知のデータとする df_test, val = read_protra_stock(stock_id, 0, 200) df_test = pd.merge(df_test, df_updown, on='date') if (val2): df_test = pd.merge(df_test, df2, on='date') #df_test = pd.concat([df_test, df]) #display_overview(df_test) # データ前処理 with timer("Cross validation"): df_test = pre_processing(df_test) display_overview(df_train) # closeの欠損値が含まれている行を削除 df_train = df_train.dropna(subset=["close"]) main(df_train, df_test, stock_id) s = "" with timer("start back test"): clf = [] for i in range(2): clf.append(load_model(i)) excluded_feats = ['target', 'date'] for stock_id in stock_names: df_test, val = read_protra_stock(stock_id, 0, 0) df_test = pd.merge(df_test, df_updown, on='date') df_test = pre_processing(df_test) #display_overview(df_test) s += pred_load_model(clf, df_test, stock_id, excluded_feats) with open(g_path + "\\lib\\LightGBM.pt", mode='w') as f: f.write(protra.merge_protra_dataset(s)) |